Rainforest QA

Rainforest QA builds browser-based developer tools that help teams ship quickly with confidence, by blending no-code test authoring with AI-powered automation.

I joined as the first designer following a major reorganization that pivoted the company from enterprise sales to product-led growth. This shift required a leaner structure without dedicated Product Managers, which meant designers were empowered to drive both product strategy and execution.

During this time I also stepped into my first leadership role as Director of Design, guiding a team of three designers and establishing a culture where design wasn't just about delivery, but central to defining the product itself.

Customer Discovery

After joining, I noticed several recent releases hadn't resonated with customers. Despite building technically sophisticated features, we were missing the mark on what users actually needed. This gap signaled we had drifted from understanding their day-to-day challenges.

I flagged this to the founders, proposing we pause feature development to conduct focused customer discovery. The goal was to rebuild our understanding of customer workflows before investing further in the wrong solutions.

Together with the CTO and a Customer Success Manager, I interviewed three key cohorts over two weeks: potential customers, active users, and those who had churned.

While doing so, we gathered feedback on two critical areas: the marketing website (our primary growth engine) and the test authoring experience (the product's core value).

The 30 interviews uncovered a wealth of insights, from confusing user flows to critical bugs. While painful to hear, the feedback reignited the team's passion for building a product we had strong conviction in.

The feedback was significant enough to reshape our entire roadmap, shifting the next six months of work to address the critical issues we'd uncovered.

Test Authoring

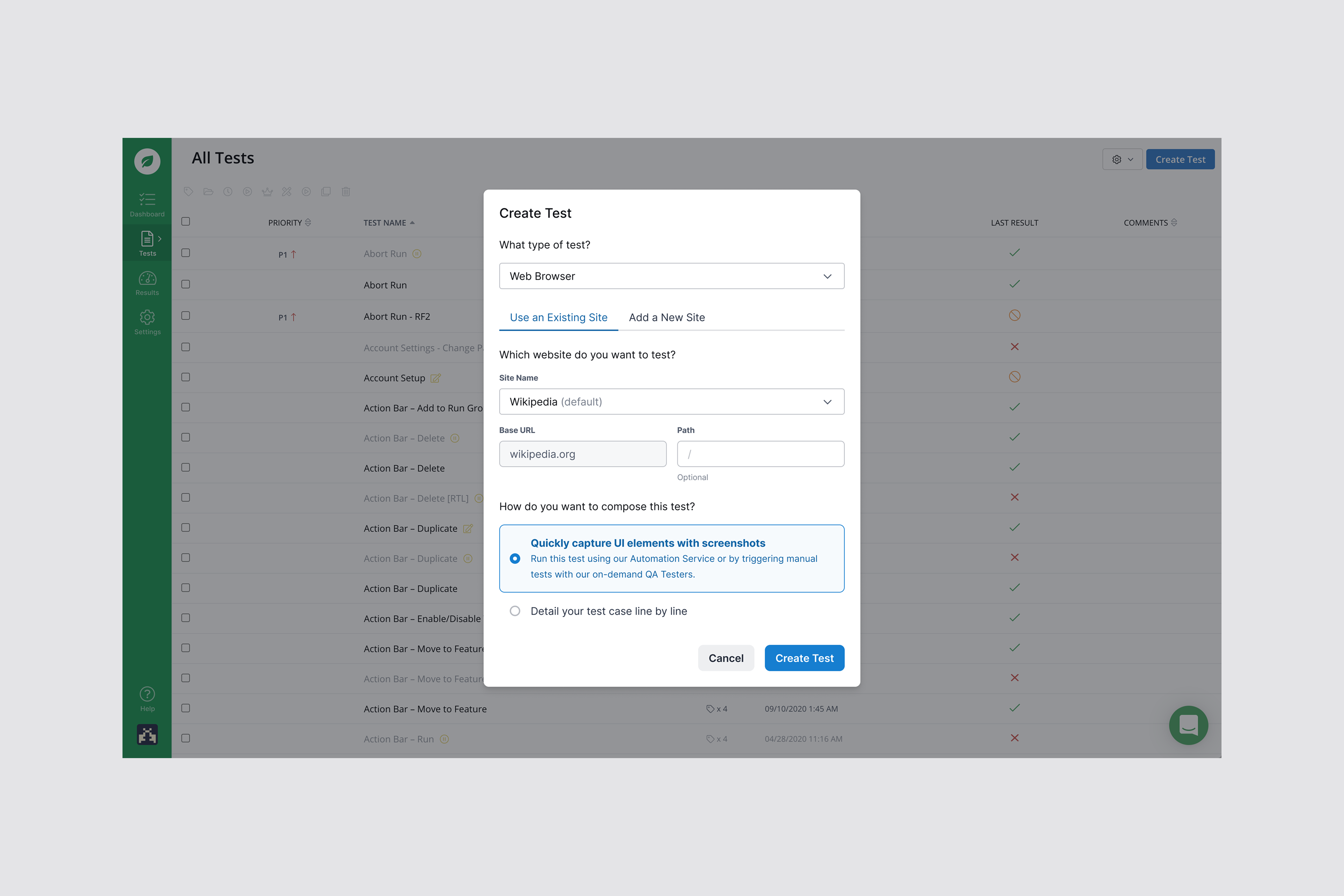

To serve teams without dedicated QA engineers, we needed a faster path from idea to executable test. Working alongside engineers, I led the design team through a comprehensive redesign spanning 12 months of iterative improvements.

The goal was to simplify the test writing experience to help new users see value sooner, while helping everyday customers write tests that were significantly more resilient to failure.

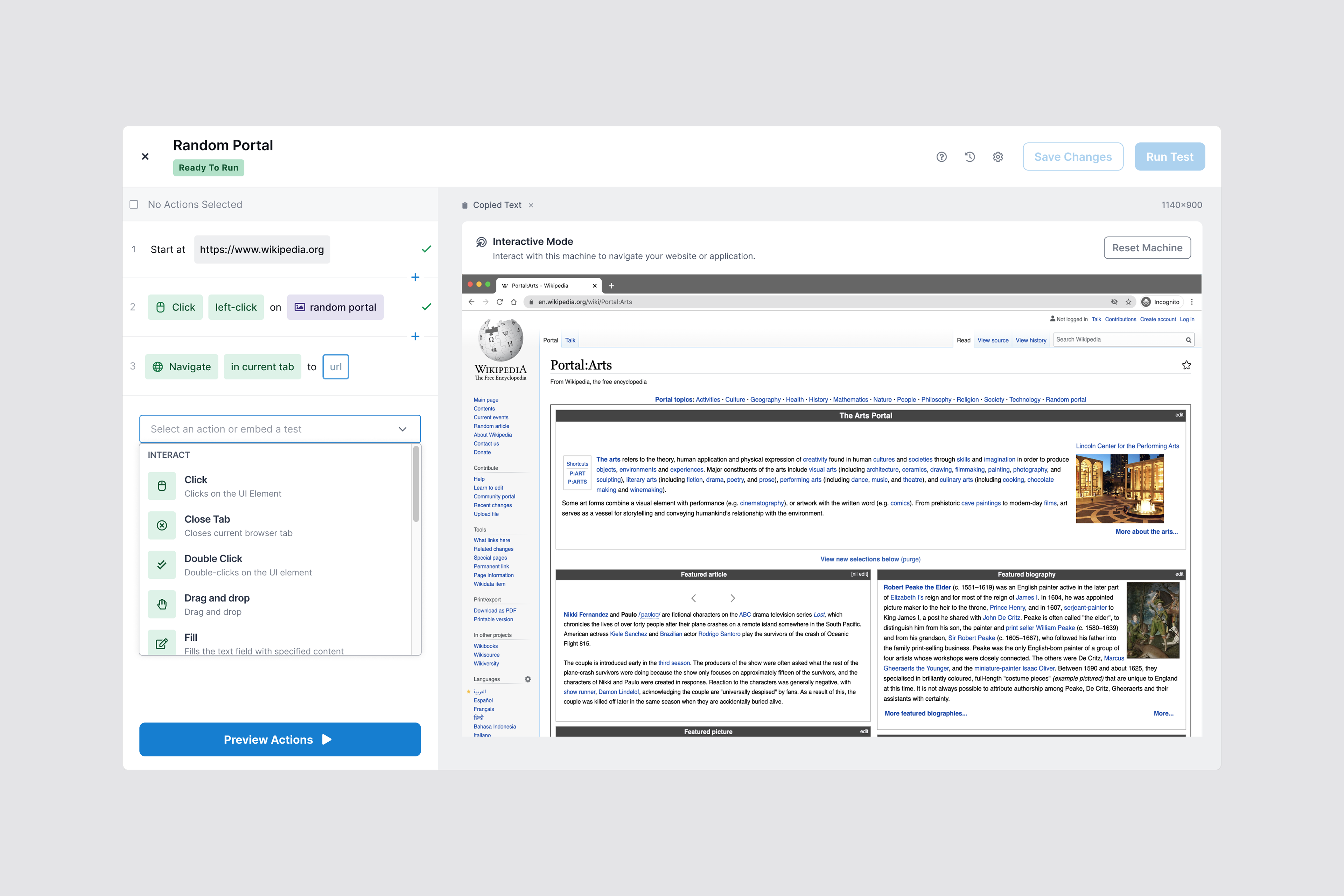

We started by rethinking the core interaction model. Using the product's natural language interface, we established a clear taxonomy of "actions" a user could instruct the browser to take.

This enabled users to write tests that read like user stories, directly in a live browser. It lowered the barrier to entry, ensuring that anyone, even non-technical staff, could understand and contribute to the test suite.

To prevent duplication, we introduced a modular component system. Users could group frequent actions, such as "Login" or "Checkout", into reusable steps. This created a shared library across teams, meaning a single update to a reusable step would instantly propagate to every test that used it, drastically reducing maintenance overhead.
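
The way an update to a shared step reaches every test can be pictured with a small sketch. This is an illustration only, not Rainforest QA's implementation: the class names (`Action`, `Component`, `BrowserTest`), the `expand` method, and the example instructions are all hypothetical. The one idea it assumes is that tests hold a reference to a shared component rather than a copy of its actions.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Action:
    """One natural-language instruction for the browser (hypothetical shape)."""
    instruction: str


@dataclass
class Component:
    """A named, reusable group of actions, such as "Login" (hypothetical)."""
    name: str
    actions: List[Action] = field(default_factory=list)


@dataclass
class BrowserTest:
    """An ordered mix of one-off actions and references to shared components."""
    name: str
    steps: List[Union[Action, Component]] = field(default_factory=list)

    def expand(self) -> List[str]:
        """Flatten the test into the instructions that would actually run."""
        flat: List[str] = []
        for step in self.steps:
            if isinstance(step, Component):
                flat.extend(action.instruction for action in step.actions)
            else:
                flat.append(step.instruction)
        return flat


# Two tests reference the same Login component instead of copying its actions.
login = Component("Login", [
    Action("Fill in the email field"),
    Action("Click the 'Log in' button"),
])
checkout_test = BrowserTest("Checkout", [login, Action("Add an item to the cart")])
profile_test = BrowserTest("Edit profile", [login, Action("Open the profile page")])

# Editing the shared component once changes every test that references it.
login.actions.insert(1, Action("Fill in the password field"))
```

Because both tests point at the same `login` object, the added password step appears in each of them the next time they are expanded, with no per-test edits.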

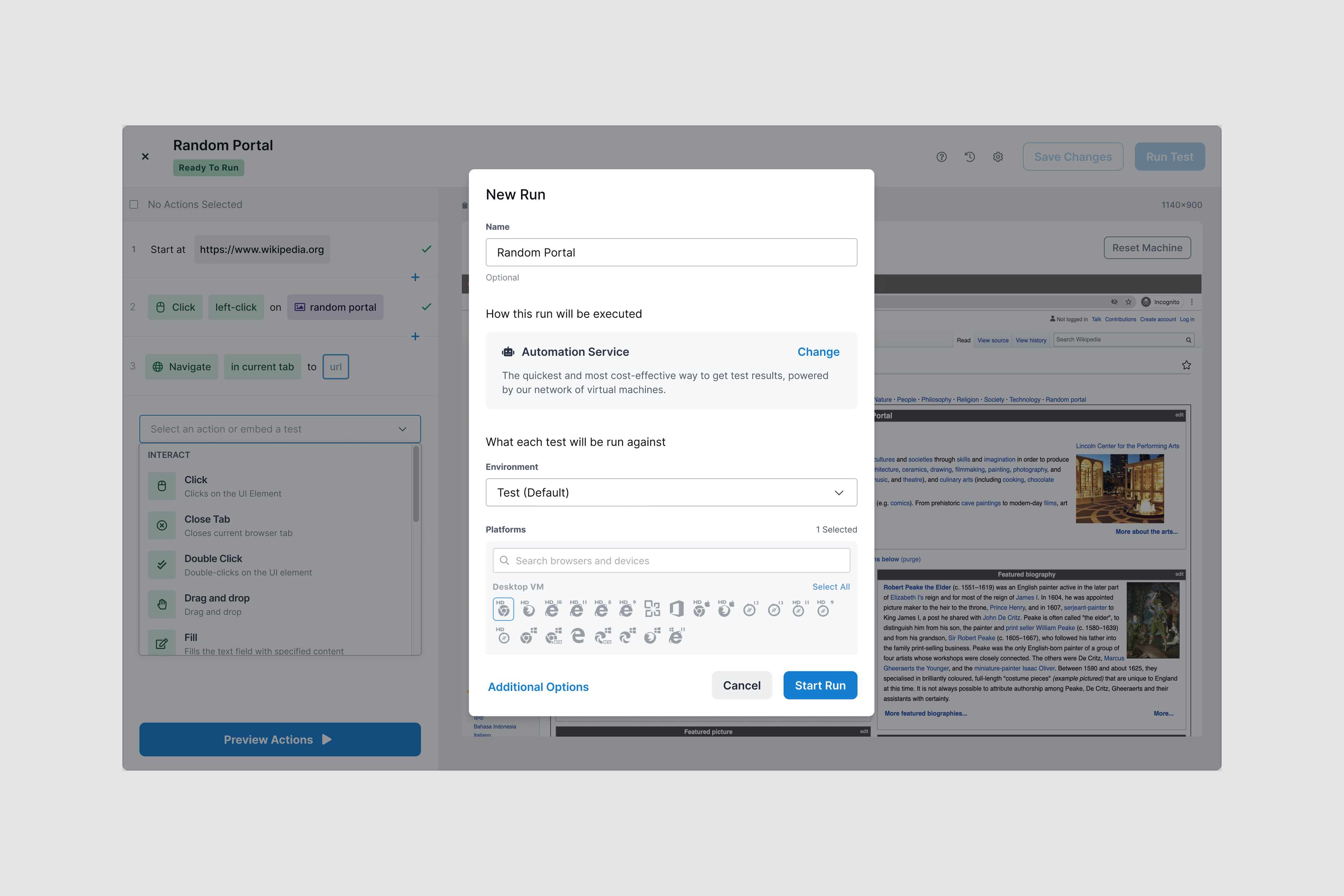

Lastly, we clarified the execution process. Users needed to understand how their tests would run: whether through automated AI agents or real people from our crowdsourced tester pool.

By surfacing execution modes alongside their costs, we empowered teams to balance speed, human insight, and budget based on each test's importance rather than defaulting to the most expensive option.

Idea Evaluation

Ideas arrived rapidly from every direction: leadership, engineering, sales, and support, each convinced their proposal should be prioritized. Without a structured evaluation process, teams frequently paused work to debate new possibilities, diluting focus and slowing progress.

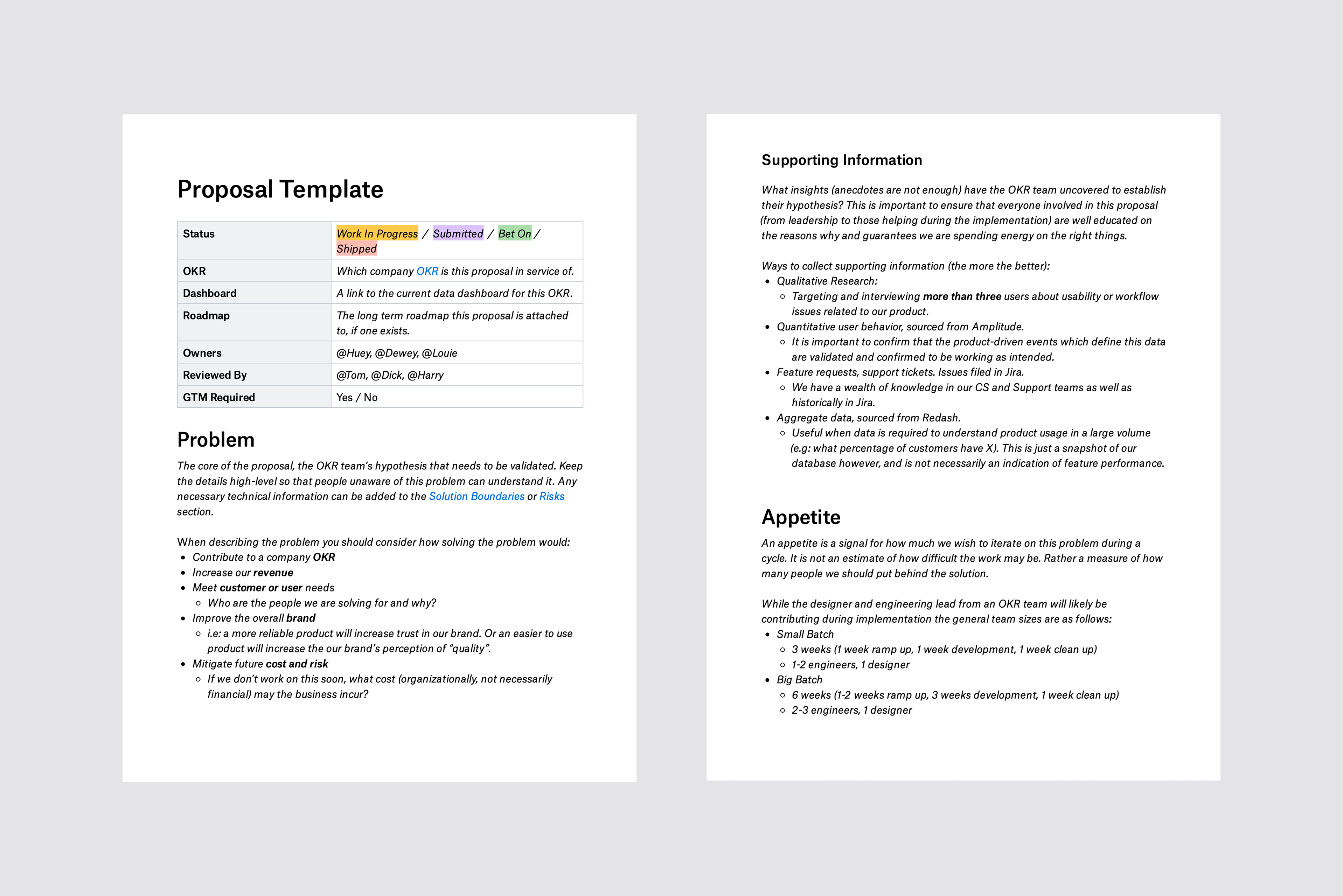

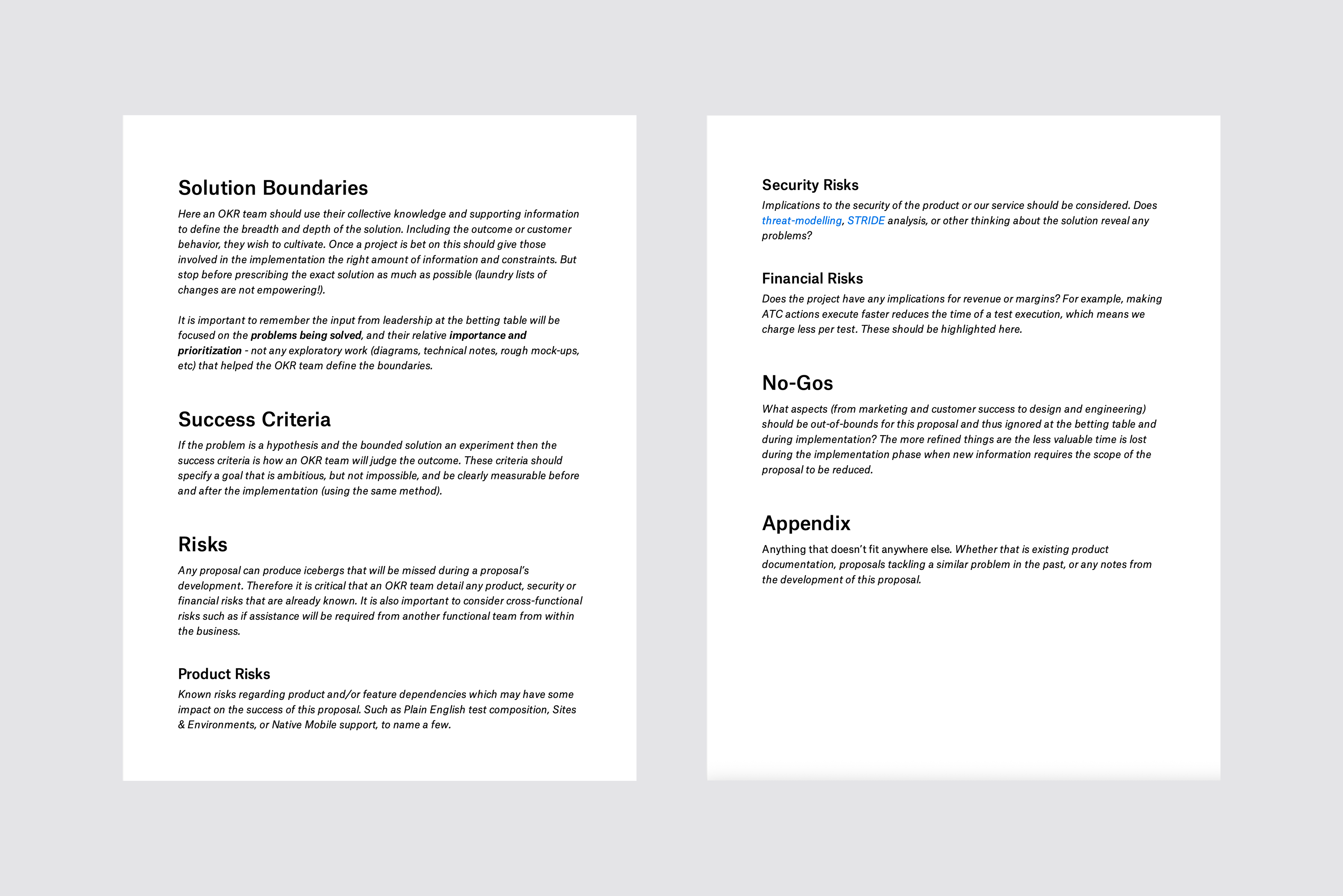

To manage this, I partnered with the Director of Engineering to establish an evaluation framework inspired by Basecamp's "Shape Up" methodology. Anyone proposing work would complete a structured brief addressing customer impact, business value, and success criteria before the team committed.

This forced proposers to articulate why their idea mattered, who it served, and how we'd measure success. These questions often revealed weak proposals early.

Crucially, it gave leadership transparency into the goals of committed work, allowing them to contribute insights or veto initiatives that strayed from the vision.

Additionally, in an organization without dedicated product managers, it distributed product thinking across the company, ensuring everyone considered the “why” behind their work, not just the “how.”